Unified data preparation and model training with Amazon SageMaker Data Wrangler

[June Updates]

On 9 June 2022, Amazon Web Services announced the general availability of splitting data into training and test sets with Amazon SageMaker Data Wrangler.

The fastest and easiest way to prepare data for machine learning.

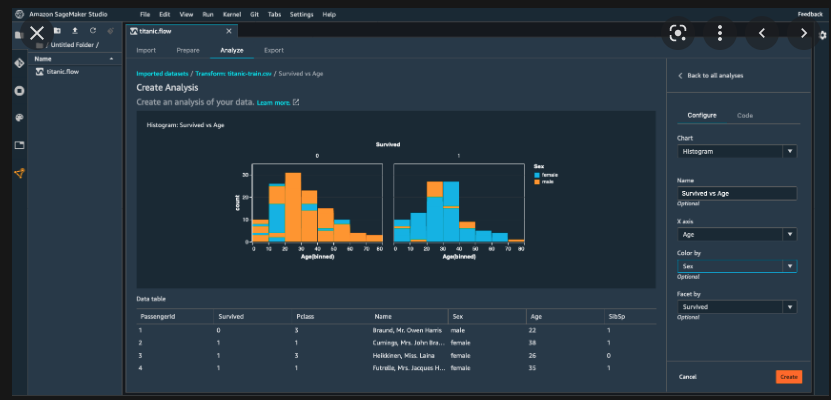

Amazon SageMaker Data Wrangler reduces the time it takes to aggregate and prepare data for machine learning (ML) from weeks to minutes. With SageMaker Data Wrangler, we can simplify data preparation and feature engineering and complete each step of the data preparation workflow, including data selection, cleansing, exploration, and visualization, from a single visual interface. With its data selection tool, we can quickly select data from multiple sources, such as Amazon S3, Amazon Athena, Amazon Redshift, AWS Lake Formation, Snowflake, and Databricks Delta Lake.

Since 9 June, we can split our customers' data into training and test sets in just a few clicks with Data Wrangler. Previously, data scientists had to write code to split their data into train and test sets before training ML models. With SageMaker Data Wrangler's new train-test split transform, we can now split the data into train, test, and validation sets for use in downstream model training and validation. SageMaker Data Wrangler also provides several types of split, including randomized, ordered, stratified, and key-based splits, along with the option to specify how much data should go into each split.
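To make the four split types more concrete, here is a minimal sketch in plain Python of the idea usually associated with each name. It is not Data Wrangler's implementation, and it is roughly the kind of code data scientists previously had to write by hand. The function names, the `train_fraction` parameter, and the hash-bucket approach for key-based splits are all assumptions chosen for illustration.

```python
import hashlib
import random
from collections import defaultdict


def randomized_split(rows, train_fraction=0.8, seed=0):
    """Shuffle the rows, then cut: each row lands in a split at random."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


def ordered_split(rows, train_fraction=0.8):
    """Keep the original order: the first rows train, the last rows test.
    Typically useful when rows are ordered in time."""
    rows = list(rows)
    cut = round(len(rows) * train_fraction)
    return rows[:cut], rows[cut:]


def stratified_split(rows, label_of, train_fraction=0.8, seed=0):
    """Split each label group separately so that both splits keep
    approximately the same label proportions as the full dataset."""
    groups = defaultdict(list)
    for row in rows:
        groups[label_of(row)].append(row)
    train, test = [], []
    for label in sorted(groups):
        group_train, group_test = randomized_split(groups[label], train_fraction, seed)
        train.extend(group_train)
        test.extend(group_test)
    return train, test


def key_based_split(rows, key_of, train_fraction=0.8):
    """Assign rows by a hash of their key, so all rows sharing a key
    (for example, one customer ID) end up in the same split.
    The resulting split sizes are only approximately train_fraction."""
    train, test = [], []
    for row in rows:
        digest = hashlib.sha256(str(key_of(row)).encode("utf-8")).digest()
        bucket = int.from_bytes(digest[:8], "big") / 2**64  # value in [0, 1)
        (train if bucket < train_fraction else test).append(row)
    return train, test
```

For example, `stratified_split(rows, lambda r: r["label"])` keeps the share of each label roughly equal in both sets, while `key_based_split(rows, lambda r: r["customer"])` guarantees that no customer appears in both the training and the test set.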

This allows us to improve the service we offer to companies and organisations. As AWS and our technicians have found, randomly dividing the data into a training set and a test set lets us train a machine learning model on the training set and then evaluate it on the test set. Evaluating a model on data it saw during training gives a biased, overly optimistic result, so setting test data aside before training is crucial. Evaluating model accuracy on the held-out test set then gives a more realistic estimate of how the model will perform on new data.
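The bias described above can be illustrated with a small, self-contained sketch. It assumes a synthetic one-feature dataset with 20% label noise and a toy 1-nearest-neighbour classifier that simply memorises its training rows. None of this comes from Data Wrangler; the dataset, the noise level, and the helper names are hypothetical.

```python
import random


def make_data(n, seed):
    """Synthetic rows (x, label): label is 1 when x > 0.5, with 20% of labels flipped."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        x = rng.random()
        label = 1 if x > 0.5 else 0
        if rng.random() < 0.2:  # assumed noise level, for illustration only
            label = 1 - label
        rows.append((x, label))
    return rows


def predict_1nn(train, x):
    """Predict the label of the training row whose x is closest."""
    nearest = min(train, key=lambda row: abs(row[0] - x))
    return nearest[1]


def accuracy(train, rows):
    """Fraction of rows whose label the 1-NN model predicts correctly."""
    correct = sum(predict_1nn(train, x) == y for x, y in rows)
    return correct / len(rows)


data = make_data(200, seed=42)
# The rows are generated in random order, so slicing acts as a random split.
train, test = data[:160], data[160:]

train_acc = accuracy(train, train)  # evaluated on data the model has memorised
test_acc = accuracy(train, test)    # evaluated on data set aside before training

print(f"accuracy on training data: {train_acc:.2f}")
print(f"accuracy on held-out test data: {test_acc:.2f}")
```

Because the model finds every training row as its own nearest neighbour, its accuracy on the training data is perfect even though a fifth of the labels are noise. The accuracy on the held-out rows is the figure that reflects how the model would behave on unseen data.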

If you want to know more about the new features, read the AWS blog and consult the AWS documentation.