LLOps: MLOps for Large Language Models

LLOps (more commonly written LLMOps) is a branch of MLOps focused on the infrastructure and tools for building and deploying large language models (LLMs). It covers the full LLM lifecycle: training, evaluation, fine-tuning, deployment, monitoring, and maintenance.

These practices automate the deployment of large language models and keep them running smoothly in products and services.

LLOps, short for large language model operations, automates the management of advanced models such as GPT-3 and GPT-4 in production environments for natural language processing applications. It matters across industries, from software development to fraud detection and customer service, because it simplifies tasks and reduces operational costs.

What is LLOps and How Does It Work?

LLOps automates the development of AI applications built on large language models. Its key components are:

- Base model selection.

- Data management.

- Model deployment and monitoring.

- Evaluation and comparison.
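As an illustration of the evaluation and comparison component, the sketch below scores several candidate models on one shared evaluation set and ranks them. It is a minimal example under stated assumptions: `model_a`, `model_b`, `EVAL_SET`, and the exact-match metric are hypothetical stand-ins, not part of any particular LLOps tool, and a real setup would call actual model endpoints and use richer metrics.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical stand-in for a real model client: maps a prompt to a reply.
Model = Callable[[str], str]


def exact_match_accuracy(model: Model, dataset: List[Tuple[str, str]]) -> float:
    """Fraction of prompts whose reply equals the expected answer (case-insensitive)."""
    if not dataset:
        return 0.0
    hits = sum(
        1
        for prompt, expected in dataset
        if model(prompt).strip().lower() == expected.strip().lower()
    )
    return hits / len(dataset)


def compare_models(
    models: Dict[str, Model], dataset: List[Tuple[str, str]]
) -> List[Tuple[str, float]]:
    """Score every candidate on the same dataset and return them best first."""
    scores = [(name, exact_match_accuracy(m, dataset)) for name, m in models.items()]
    return sorted(scores, key=lambda item: item[1], reverse=True)


# Toy evaluation set and toy "models" (illustrative only).
EVAL_SET = [
    ("capital of France?", "Paris"),
    ("2 + 2?", "4"),
    ("opposite of hot?", "cold"),
]


def model_a(prompt: str) -> str:
    answers = {"capital of France?": "Paris", "2 + 2?": "4"}
    return answers.get(prompt, "unknown")


def model_b(prompt: str) -> str:
    return "paris" if "France" in prompt else "unknown"


ranking = compare_models({"model_a": model_a, "model_b": model_b}, EVAL_SET)
for name, score in ranking:
    print(f"{name}: {score:.2f}")
```

Scoring all candidates on the same fixed dataset is what makes the comparison meaningful; the same harness can be rerun whenever a new base model or fine-tuned version is considered.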

Incorporating LLOps into technological solutions offers advantages such as:

Agile and Real-Time Updates

Thanks to LLOps, we can roll out updates and improvements to our language models quickly, keeping our services up to date and competitive.
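One way to picture fast, safe updates is a small registry that records which model version is live and lets us roll back if an update misbehaves. This is only a sketch: `ModelRegistry` and the version names are hypothetical, and production registries also store artifacts, metadata, and approvals.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ModelRegistry:
    """Tracks which model version serves traffic and keeps history for rollback."""

    history: List[str] = field(default_factory=list)

    @property
    def live(self) -> Optional[str]:
        # The most recently promoted version is the one serving traffic.
        return self.history[-1] if self.history else None

    def promote(self, version: str) -> None:
        """Make a new version live while remembering the previous ones."""
        self.history.append(version)

    def rollback(self) -> Optional[str]:
        """Drop the live version and return the one that replaces it."""
        if len(self.history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self.history.pop()
        return self.live


# Hypothetical version names, for illustration only.
registry = ModelRegistry()
registry.promote("support-bot-v1")
registry.promote("support-bot-v2")
restored = registry.rollback()  # v2 misbehaved, so return to v1
print(restored)
```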

Enhanced Reliability

Automation makes the deployment of language models consistent and repeatable, which reduces the likelihood of production issues and increases the reliability of our services.
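Reliability also depends on noticing problems early. The sketch below shows one possible monitoring check: it keeps a rolling window of recent requests and reports when the error rate or the 95th percentile latency crosses a threshold. The class name `RollingMonitor`, the thresholds, and the sample values are assumptions chosen for illustration.

```python
import math
from collections import deque
from typing import Deque, List, Tuple


class RollingMonitor:
    """Keeps the most recent requests and flags threshold breaches."""

    def __init__(self, window: int = 100, max_error_rate: float = 0.05,
                 max_p95_latency_s: float = 2.0) -> None:
        # Each sample is (latency in seconds, whether the request succeeded).
        self.samples: Deque[Tuple[float, bool]] = deque(maxlen=window)
        self.max_error_rate = max_error_rate
        self.max_p95_latency_s = max_p95_latency_s

    def record(self, latency_s: float, ok: bool) -> None:
        self.samples.append((latency_s, ok))

    def error_rate(self) -> float:
        if not self.samples:
            return 0.0
        failures = sum(1 for _, ok in self.samples if not ok)
        return failures / len(self.samples)

    def p95_latency(self) -> float:
        """95th percentile latency using the nearest-rank method."""
        if not self.samples:
            return 0.0
        latencies = sorted(latency for latency, _ in self.samples)
        rank = math.ceil(0.95 * len(latencies))
        return latencies[rank - 1]

    def alerts(self) -> List[str]:
        found = []
        if self.error_rate() > self.max_error_rate:
            found.append("error rate above threshold")
        if self.p95_latency() > self.max_p95_latency_s:
            found.append("p95 latency above threshold")
        return found


# Illustrative traffic: 7 successful requests and 3 failures.
monitor = RollingMonitor(window=10, max_error_rate=0.2, max_p95_latency_s=1.0)
for _ in range(7):
    monitor.record(latency_s=0.3, ok=True)
for _ in range(3):
    monitor.record(latency_s=0.4, ok=False)

print(monitor.alerts())
```

Using a bounded window means old traffic ages out automatically, so the alerts reflect current behavior rather than the whole history of the service.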

Integration with DataOps

LLOps practices integrate with DataOps practices, providing a smooth data flow from ingestion to model deployment. This integration promotes data-driven decision-making and accelerates value delivery.

Faster Iteration and Feedback Cycles

Simplified Collaboration

Improved Security and Privacy

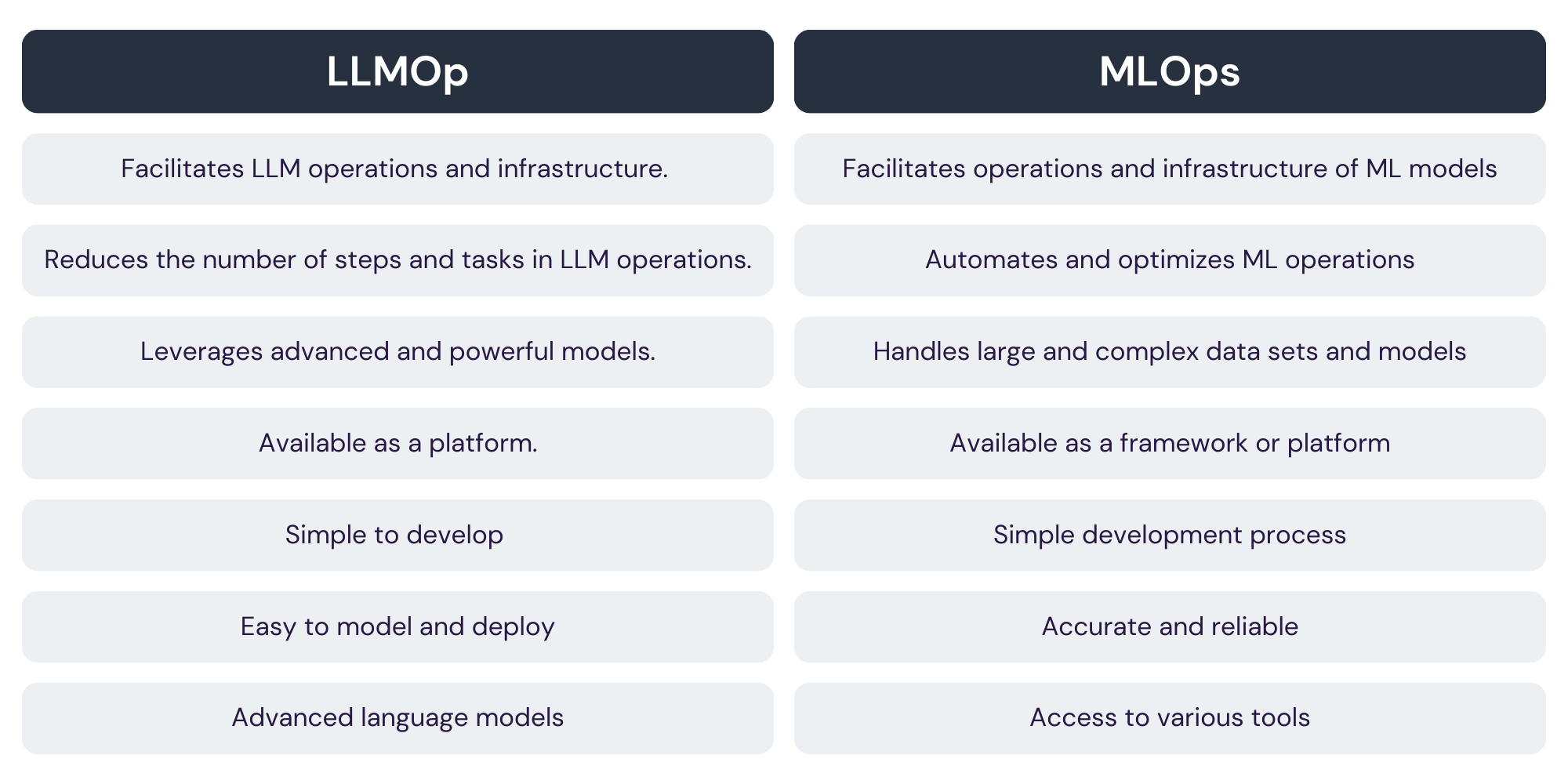

LLOps and MLOps

Both approaches coexist in artificial intelligence and machine learning, but LLOps focuses on large language models, while MLOps covers a broader range of machine learning models.

The choice between MLOps and LLOps depends on your goals, the nature of your projects, available resources, and team experience. If your focus is deploying machine learning models in general efficiently, MLOps is suitable. If you are working on natural language processing tasks, LLOps is the better fit, especially for content generation, chatbots, and virtual assistants. Some projects may require a combination of both approaches, as there is no strict division between MLOps and LLOps.

Real-World Uses of LLOps

The need for LLOps arises from the potential of language models to revolutionize AI development. Although these models possess immense capabilities, their effective integration requires sophisticated strategies to handle complexity, foster innovation, and ensure ethical use. In business applications, LLOps is shaping various industries.

Content Generation

Using language models to automate the creation of content such as summaries or sentiment analysis.

Customer Support

Enhancing chatbots and virtual assistants with language model capabilities.

Data Analysis

Extracting information from textual data to enrich decision-making processes.